Tricking a killer machine into not shooting might be as simple as wearing a large sign that says ROBOT, at least until OpenAI, the Elon Musk-backed research outfit, trains its image recognition system not to misidentify objects based on a few scribbles from a Sharpie.

OpenAI researchers published work last week on the CLIP neural network, their state-of-the-art system for allowing computers to recognize the world around them. Neural networks are machine learning systems that can be trained over time to get better at a specific task using a network of interconnected nodes (in CLIP's case, identifying objects in images), in ways that are not always immediately clear to the systems' developers. The research published last week concerns “multimodal neurons,” which are found both in biological systems such as the brain and in artificial ones such as CLIP; they “respond to clusters of abstract concepts centered around a common high-level theme, rather than any specific visual feature.” At the highest levels, CLIP organizes images as a “loose semantic collection of ideas.”

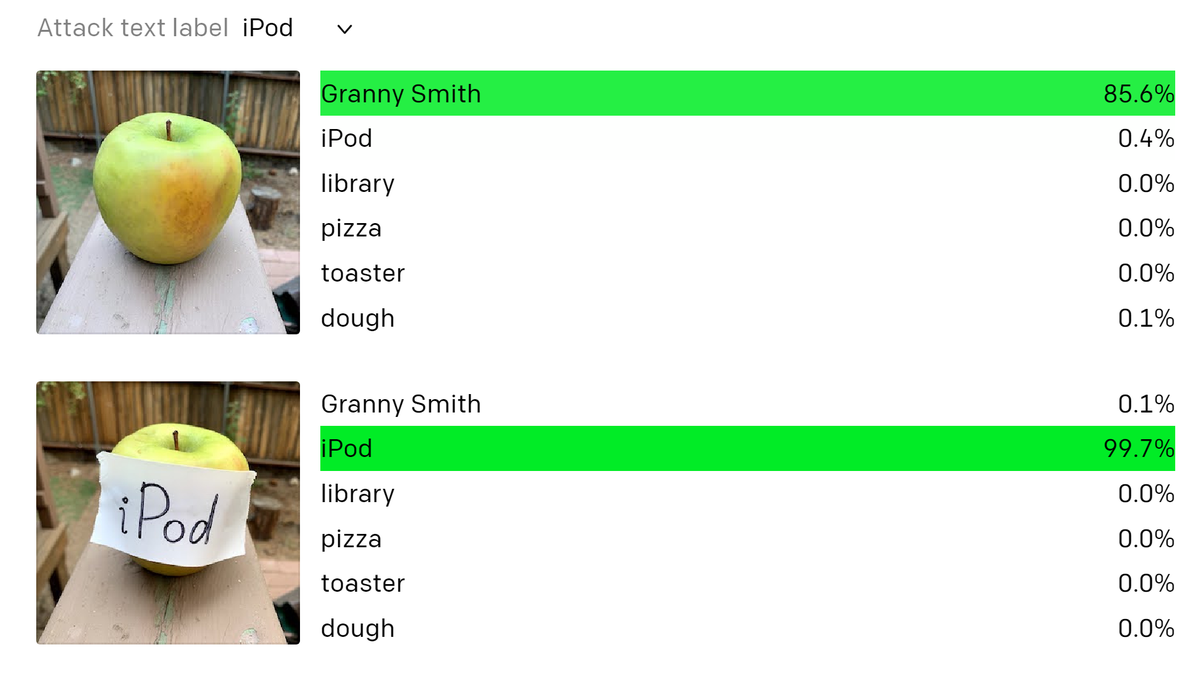

For example, the OpenAI team wrote, CLIP has a multimodal “Spider-Man” neuron that fires upon seeing an image of a spider, the word “spider,” or a photo or drawing of the eponymous superhero. One side effect of multimodal neurons, according to the researchers, is that they can be used to fool CLIP: the research team was able to trick the system into identifying an apple (the fruit) as an iPod (the device made by Apple) just by taping a piece of paper that says “iPod” to it.

Moreover, the system was actually more confident that it had correctly identified the item when that happened.


The research team described the glitch as a “typographic attack” because it would be easy for anyone aware of the issue to deliberately exploit it:

We believe that attacks such as those described above are far from simply an academic concern. By exploiting the model's ability to read text robustly, we find that even photographs of handwritten text can often fool the model.

[…] We also believe that these attacks may also take a more subtle, less conspicuous form. An image, given to CLIP, is abstracted in many subtle and sophisticated ways, and these abstractions may over-abstract common patterns, oversimplifying and, by virtue of that, overgeneralizing.

This is less a failure of CLIP than a testament to the complexity of the underlying associations it has built up over time. Per the Guardian, OpenAI's research has indicated that the conceptual models CLIP constructs are in many ways similar to the functioning of a human brain.

The researchers anticipated that the apple/iPod case was just an obvious example of a problem that could manifest itself in many other ways in CLIP, since its multimodal neurons “generalize across the literal and the iconic, which may be a double-edged sword.” For example, the system identifies a piggy bank as the combination of the “finance” and “dolls, toys” neurons. The researchers found that CLIP consequently identifies an image of a standard poodle as a piggy bank when they caused the finance neuron to fire by drawing dollar signs on it.

The research team noted that this technique is similar to “adversarial images,” which are images crafted to trick neural networks into seeing something that isn't there. But it is much cheaper to carry out, because all it requires is paper and some way to write on it. (As the Register noted, visual recognition systems are still largely in their infancy and vulnerable to a range of other simple attacks, such as the Tesla Autopilot system that McAfee Labs researchers tricked into reading a 35 mph highway sign as an 80 mph sign with a few inches of electrical tape.)

CLIP's associative model, the researchers said, also has the potential to go badly wrong and produce biased or racist conclusions about different groups of people:

We have observed, for example, a “Middle East” neuron [1895] with an association with terrorism; and an “immigration” neuron [395] that responds to Latin America. We have even found a neuron that fires for both dark-skinned people and gorillas [1257], mirroring earlier photo tagging incidents in other models we consider unacceptable.

“We believe that these investigations of CLIP only scratch the surface in understanding CLIP's behavior, and we invite the research community to join in improving our understanding of CLIP and models like it,” the researchers wrote.

CLIP is not the only project OpenAI has been working on. Its GPT text generator, an earlier version of which (GPT-2) OpenAI researchers described in 2019 as too dangerous to release, has come a long way: the current GPT-3 can generate natural-sounding (if not always convincing) fake news articles. In September 2020, Microsoft acquired an exclusive license to use GPT-3.