We don’t know how much time elapsed between the invention of the wheel and someone putting wheels on their feet, but we think it was a good move: it combines the ability to move at speed with our feet’s ability to handle rapidly changing terrain. Now that we have a wide range of recreational wheeled footwear, what next? How about teaching robots to skate, too? An IEEE Spectrum interview with [Marko Bjelonic] of ETH Zürich outlines progress by one of many research teams working on the problem.

For many of us, the first robot we saw rolling on powered wheels at the ends of actively controlled legs was Boston Dynamics’ ‘Handle’, when pictures of the project appeared a few years ago. Going up and down varied terrain and performing the occasional jump, its athleticism caused a huge stir in robotics circles. But when Handle was introduced as a commercial product, its job was… stacking boxes in a warehouse? That was a disappointment. Warehouse floors are very flat, leaving Handle’s versatility unused.

Boston Dynamics has generally been very tight-lipped about the details of its robot development, so we may never know the full story behind Handle. But what they achieved has certainly made a lot more people think about the control problems involved. Even humans face an irregular learning curve marked by bruised and sometimes broken body parts, and that’s before we start putting power into the wheels. So there are plenty of problems to solve, generating a steady stream of research papers describing how robots could master this mode of locomotion.

Adding to the excitement is the fact that this is becoming an area where reality catches up with fiction, which has long imagined wheel-legged robots such as the Tachikoma of Ghost in the Shell. While these fictional robots have inspired projects ranging from LEGO creations to 28-servo machines, those builds have not coordinated their wheel and leg movements independently the way this generation of research robots does.

As control algorithms evolve in robotics research labs around the world, we are confident that we will see wheel-legged robots find applications in other fields. The concept is far too cool to be limited to stacking boxes in a warehouse.

Keep reading “Legged robots hitting wheels and skating away”

Cameras keep getting smaller and turning up in more places. Now they are being hidden under the skin of robots.

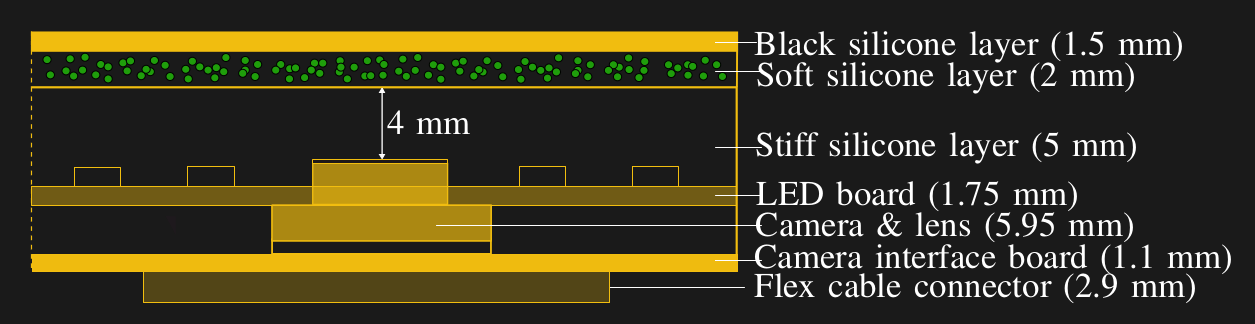

A team of researchers from ETH Zurich in Switzerland has recently created a multi-camera optical tactile sensor capable of estimating the distribution of contact force across its surface. The sensor uses a stack containing cameras, LEDs, and three layers of silicone to detect any disturbance of the skin.

The design is modular: this example uses four cameras, but it can be extended beyond that. During fabrication, the camera and LED circuit boards are mounted and a stiff silicone layer is poured to about 5 mm thick. Next, a 2 mm layer containing spherical particles is poured, followed by a final 1.5 mm layer of black silicone. The cameras track the particles as they move and use that information to infer the deformation of the material and the force applied to it. The sensor can also work back from that deformation to reconstruct the forces that caused it, producing a map of the contact force distribution. The demo uses very cheap cameras (Raspberry Pi cameras processed on an NVIDIA Jetson Nano Developer Kit) that together deliver about 65,000 pixels of resolution.

As well as providing more information about the forces applied to a surface, the sensor has a larger contact surface and is thinner than other camera-based systems because it does not need reflective components. Its force estimates come from a convolutional neural network that was pre-trained on data from three of the cameras and then updated with data from all four. Possible future applications include soft robots, with computer vision algorithms helping to advance touch-based sensing.

While self-aware robotic skin may not hit the market any time soon, this certainly opens up the possibility of robots that detect when their structures are over-stressed: the robotic equivalent of pain.

Keep reading “Robotic skin can see when (and how) you touch it”

We spend a lot of time here at Hackaday talking about drone incidents, and today we are looking at the danger of flying in areas where people are present. Accidents do happen, and whether it’s a hardware failure or just a dead battery pack, the chance of a multirotor crashing down on the people below is a real and ongoing threat. Non-professional flyers are prohibited from flying over large crowds, but there are cases where drones flown by professional pilots operate near a large crowd, and other safety measures need to be considered.

We saw a skier narrowly missed by a crashing camera drone in 2015, and a week or two ago there was news that a postal drone trial in Switzerland had been suspended after a parachute system failed. When a multirotor fails in flight, it becomes a multi-kilogram flying weapon equipped with spinning blades, so how do you bring it to the ground as safely as possible? Does it fall uncontrolled, or does it activate safety technology and retain some control as it descends?

Keep reading “Safety systems to prevent unregulated drone crash”

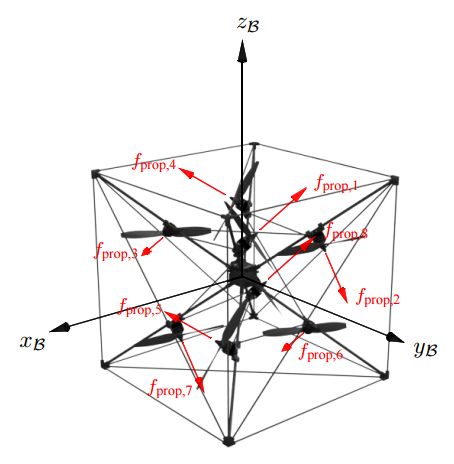

Wouldn’t it be great if you had a flying machine that could move in any direction while rotating about any axis, with independent control of both thrust and torque? Attach a robot arm and the machine could position itself anywhere and move objects around as needed. [Dario Brescianini] and [Raffaello D’Andrea] of the Institute for Dynamic Systems and Control at ETH Zurich have come up with their Omnicopter, which does just that by using eight rotors in a configuration that gives it six degrees of freedom. Oh, and it plays fetch, as seen in the first video below.

Each propeller can provide thrust in either direction. Also on board the vehicle are a Pixhawk PX4FMU flight computer, eight motors and motor controllers, an 1800 mAh four-cell LiPo battery, and communication radios. The radio link is essential because the position and attitude control calculations are done on a desktop computer, which then sends the desired force and torque values to the vehicle. The desktop computer knows the vehicle’s position and orientation because it flies in the Flying Machine Arena, a large room at ETH Zurich equipped with an infrared motion capture system.
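Sending a desired force and torque implies a control allocation step somewhere: one six-component command has to be split into eight rotor thrusts. The snippet below is a minimal sketch of one common way to do that, the pseudo-inverse of an allocation matrix. Everything in it is an assumption for illustration: the rotor positions and directions, the 0.3 m arm length, and the 2 kg mass are invented and are not the Omnicopter’s real layout, propeller drag torque is ignored, and we don’t know whether the ETH team solved allocation this way.

```python
import numpy as np

# HYPOTHETICAL geometry, NOT the real Omnicopter layout. Arm length in metres.
ARM = 0.3

# Each rotor: (position relative to the centre of mass, thrust direction).
# The first six rotors alone give full 6-DOF control; the last two are
# redundant, as the extra rotors of an eight-rotor design would be.
ROTORS = [
    ((0.0, ARM, 0.0), (1.0, 0.0, 0.0)),
    ((0.0, -ARM, 0.0), (1.0, 0.0, 0.0)),
    ((0.0, 0.0, ARM), (0.0, 1.0, 0.0)),
    ((0.0, 0.0, -ARM), (0.0, 1.0, 0.0)),
    ((ARM, 0.0, 0.0), (0.0, 0.0, 1.0)),
    ((-ARM, 0.0, 0.0), (0.0, 0.0, 1.0)),
    ((ARM, 0.0, 0.0), (0.0, 1.0, 0.0)),
    ((0.0, ARM, 0.0), (0.0, 0.0, 1.0)),
]


def allocation_matrix(rotors):
    """Build the 6x8 matrix mapping rotor thrusts (N) to body force and torque.

    Column i is [d_i; p_i x d_i]: rotor i's unit thrust direction and the
    torque a unit thrust produces about the centre of mass. Propeller drag
    torque is ignored to keep the sketch short.
    """
    cols = []
    for position, direction in rotors:
        p = np.asarray(position, dtype=float)
        d = np.asarray(direction, dtype=float)
        d = d / np.linalg.norm(d)
        cols.append(np.concatenate([d, np.cross(p, d)]))
    return np.column_stack(cols)


def allocate(force, torque, A):
    """Return per-rotor thrusts that reproduce the desired force and torque.

    The Moore-Penrose pseudo-inverse gives the minimum-norm solution of
    A @ f = w. Negative entries mean that propeller spins in reverse,
    which bidirectional rotors allow.
    """
    wrench = np.concatenate([np.asarray(force, float), np.asarray(torque, float)])
    return np.linalg.pinv(A) @ wrench


if __name__ == "__main__":
    A = allocation_matrix(ROTORS)
    print("rank:", np.linalg.matrix_rank(A))  # 6 means full 6-DOF control
    # Body-frame command: hold a hypothetical 2 kg vehicle against gravity
    # while applying a small yaw torque.
    thrusts = allocate([0.0, 0.0, 2.0 * 9.81], [0.0, 0.0, 0.2], A)
    print(np.round(thrusts, 3))
```

Because this allocation matrix has full row rank, multiplying it by the computed thrusts reproduces the commanded force and torque exactly; the pseudo-inverse simply chooses, among all eight-rotor solutions, the one with the smallest total squared thrust.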

The result almost makes it look as though gravity doesn’t apply to the Omnicopter. The flying machine can also be playful, as you can see in the first video below, where it uses an attached net to catch a ball. When it returns the ball, it actually moves the net to toss the ball into the thrower’s hand. But you’ll have to see that in the video.

Keep reading “Flying Omnicopter, Fetching, Helping-Hand”

Satellites make many of our daily tasks possible, and the technology is constantly advancing by leaps and bounds. A project recently completed by [Arda Tüysüz]’s team at the Power Electronics Systems Lab of ETH Zürich, in collaboration with its spinoff Celeroton, aims to improve satellite positioning with a high-speed, magnetically levitated motor.

Beginning as doctoral dissertation work led by [Tüysüz], the motor builds on existing technologies but has been adapted to a new application, with great impact. Currently, the motors that orient satellites are run at low rpm to reduce wear, they must be sealed in a low-pressure nitrogen environment to prevent corrosion of their parts, and the micro-vibrations caused by their ball bearings reduce positioning accuracy. In one fell swoop, this new prototype motor overcomes all of these problems.

Keep reading “Modest Motor has revolutionary applications”

In the race to produce full-color 3D objects as cheaply and efficiently as possible, we think Disney Research, working with ETH Zurich’s Interactive Geometry Lab, may hold the key: combining hydrographic printing techniques with plastic thermoforming.

You may remember our article last year about putting photorealistic images onto 3D objects using a method called hydrographic printing, where you print a flat image with a regular printer on special film and then transfer it to a 3D object in a water bath. This is basically the same idea, but instead of hydrographic transfer, they combine the flat printed image with thermoforming, which seems like such an obvious solution!

Keep reading “Creating full color images of thermoformed parts”